Churn Rate | Why It Should Be Measured


The churn rate of a codebase tells you how quickly the code is changing. It’s typically measured as the number of lines of code (LOC) that were modified, added, and deleted over a short period of time. It is an important software metric that gives insight into both the software development process and the quality of the software.
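As a rough illustration of the LOC-based measurement described above, the sketch below sums added and deleted lines per file from text in the format printed by `git log --numstat`. It assumes Git is the version control system, which this article does not specify, and it treats a modified line as one deletion plus one addition, since that is how line-based diffs report it. The names `Churn` and `churn_from_numstat` and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Churn:
    added: int = 0
    deleted: int = 0

    @property
    def total(self) -> int:
        # Churn here is simply lines added plus lines deleted.
        return self.added + self.deleted


def churn_from_numstat(numstat_text: str) -> dict[str, Churn]:
    """Sum added/deleted lines per file from `git log --numstat` style text."""
    per_file: dict[str, Churn] = {}
    for line in numstat_text.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank lines and anything that is not a numstat row
        added, deleted, path = parts
        if not (added.isdigit() and deleted.isdigit()):
            continue  # binary files are reported as "-" and have no line counts
        entry = per_file.setdefault(path, Churn())
        entry.added += int(added)
        entry.deleted += int(deleted)
    return per_file


# Hypothetical sample: two commits touching billing.py, one image, one README edit.
SAMPLE = (
    "12\t3\tapp/billing.py\n"
    "4\t4\tapp/billing.py\n"
    "-\t-\tlogo.png\n"
    "7\t0\tREADME.md\n"
)

result = churn_from_numstat(SAMPLE)
for path, churn in sorted(result.items()):
    print(f"{path}: +{churn.added} -{churn.deleted} churn={churn.total}")
```

To try it on a real repository, the input could come from something like `git log --since="2 weeks ago" --numstat --format=`, which restricts the window to a short period as the definition suggests. The window length is a choice for the team, not something this sketch prescribes.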

However, does a high churn rate mean that the developer worked hard, writing the code, rewriting it, and refining it until it was better? Or does it mean the opposite?

Not necessarily! The churn rate is not directly proportional to the developer’s productivity. Treating it as if it were turns it into a subjective way of measuring software quality and developer productivity.

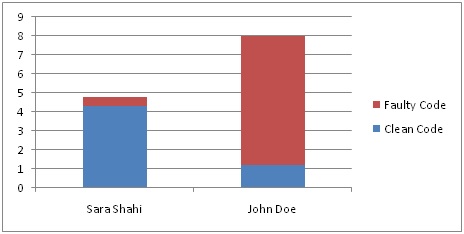

For example, John has written more lines of code than Sara and churned them more. Sara, however, has written the higher-quality code: she got it right the first time, with a lower churn rate.

The churn rate needs to be tracked early in the process to save the considerable time and cost that would otherwise be incurred once the code reaches production. Left untracked, developers spend a lot of time churning, which lowers the quality of the code and makes them less productive.

There needs to be an objective metric that measures these factors and tracks both the quality of the software and the value of the lines of code being written.

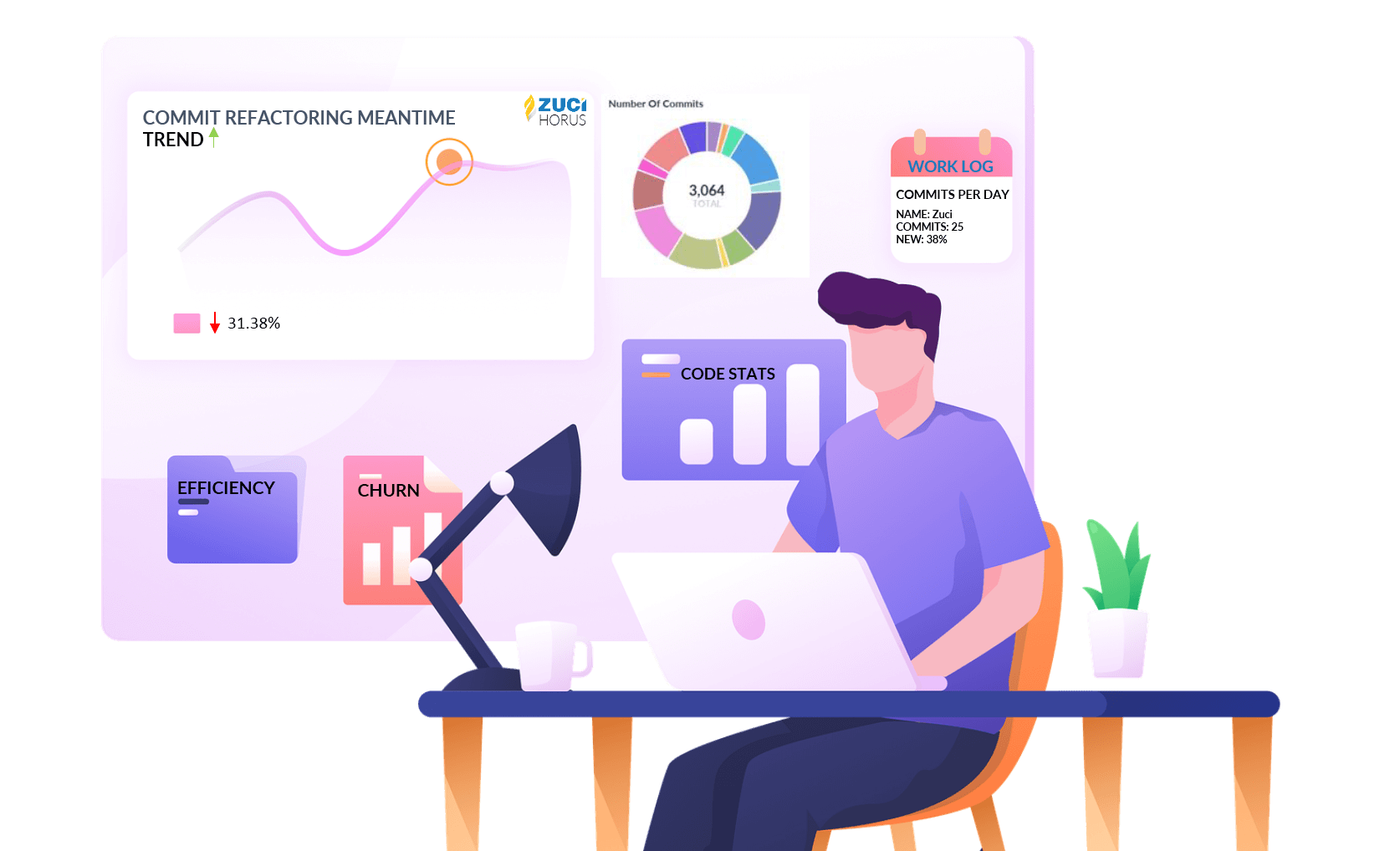

Horus, from Zuci, is an engineering management platform with a set of metrics that helps measure these factors and track the health score of the application. Head over to explore Horus.

Learn more about Horus in the previous article.

Read also: Class Fattiness
