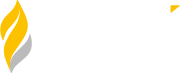

This is just one example of faulty software. Countless other incidents happen across industries every day.

Naturally, many of us might have pointed fingers at the QA team for not testing properly, as they had always been seen as the guardians of quality.

But does that apply to this scenario? Does the blame game apply to any such scenario in today’s agile software development lifecycle?

The answer is no.

At Zuci, we have been engaging with clients from diverse industries for software quality consultation, and we’ve noticed a common pattern emerging: Quality is being confined to the realm of the QA teams.

So, in this edition of Z to A Pulse, we want to talk about taking a

Holistic consulting approach to software quality

Hello readers, welcome! This edition of the Z to A Pulse monthly newsletter is brought to you by Keerthi V, Marketing Strategist at Zuci Systems.

Case in point 1:

In one of the recent engagements, Zuci Systems partnered with a client who operated in the e-commerce space. This client provided solutions for postal operators, couriers, and logistics organizations worldwide. They played a crucial role in delivering an enhanced retail and digital customer experience, as well as enabling new revenue streams and optimizing delivery economics.

The sheer volume of transactions their software handled on a daily basis was staggering. It was evident that the success of their business relied on putting reliable software in the hands of happy users.

To provide a seamless user experience, the client was clear about the need to address the quality gaps in the product and was looking for ways to improve QA processes across its teams.

They wanted an experienced, independent third party to conduct a gap analysis of their current QA setup and processes and suggest areas of improvement. That’s when Zuci came into the picture.

It was an interesting 8-week experience because we delved deep into their suite of applications, not just from a QA standpoint but also from a software engineering processes perspective, taking a bird’s eye view of their architecture, code, infrastructure, and other relevant areas.

The outcome of our efforts was a 56-page report—a consolidated view of our approach to quality, covering everything from requirements to release. It provided our client with a roadmap for improvement, highlighting areas where they could enhance their processes and ensure a higher standard of quality.

Interested in reading the report? Just drop a hi at sales@zucisystems.com

At Zuci, we believe in

Quality is everyone’s responsibility

So, for any consulting or gap analysis engagement, we start by understanding the “quality culture” at the client’s organization.

Because good software quality is not about looking at testing alone. It’s about wearing the investigative hat and digging deeper into the software to uncover the unknowns.

In gap consulting engagements, we divide our approach into two parts: Test process assessment and Engineering process assessment. This allows us to comprehensively evaluate all the practices followed in the Software Development Life Cycle (SDLC).

Within the Test process assessment, our focus is on three key areas:

- Test Engineering

- Test Management

- Test Governance & Compliance.

For this client, we analysed data from JIRA, spanning a period of 12 to 14 months, to measure crucial metrics and gauge the maturity of the client’s processes.

Some findings included:

- Defining the importance of having an E2E test strategy

- Establishing the “Definition of Done” for a user story

- Ensuring requirement traceability to code through tools like JIRA, Bitbucket, and Azure DevOps

- Emphasizing the need for TDD

- Highlighting the importance of having dedicated test environments

- Conducting root cause analysis

To gain a better understanding of these findings, I reached out to Dhanalakshmi Tamilarasan, SDET manager at Zuci Systems.

Keerthi: Why is a well-designed E2E test strategy important?

Dhana: The purpose of an end-to-end (E2E) test strategy is to clearly define and discover how a product behaves, in terms of both its functional and non-functional aspects. The test strategy should be a living document that is regularly discussed with the entire team.

A well-defined test strategy lays out the guidelines for how we approach testing to achieve our test objectives and execute the different types of tests we’ve got lined up in our testing plan. It encompasses various aspects such as test objectives, approaches, test environments, automation strategies and tools, as well as risk analysis with a contingency plan.

In a nutshell, the test strategy serves as an actionable plan to realize the overarching product vision.

Keerthi: Are there any best practices we follow at Zuci for designing an effective test strategy?

Dhana: A couple of the best practices we follow here are:

- Brainstorming sessions with teams on all aspects of testing before arriving at the test strategy

- Peer review of the test strategy with fellow leads

- Detailed review and sign-off of the test strategy by SMEs and product owners

Keerthi: What should be an ideal “Definition of Done” for a user story?

Dhana:

According to Scrum.org, a definition of done (DoD) is a shared understanding of expectations that the current sprint (or increment) must meet in order to be released to users.

The Definition of Done is an agreed-upon set of rules/checklist that must be completed for every work item across the team/release.

Goals of DoD:

- Build a common understanding of quality and completeness

- Define the checklist of criteria to check in each user story

- Ensure the shipment of the agreed-upon increment

A few examples of DoD criteria (not an exhaustive list):

- Unit test (UT) coverage of every module meets X% before a PR is raised

- All developed modules follow the defined CI/CD process

- At least X% in-sprint automation achieved

- No Priority 1 or 2 bugs in any functionality

- Non-functional requirements met

- User documentation updated
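As a rough illustration, a checklist like the one above can be expressed as an automated check on each user story. The sketch below is only an assumption of how that might look: `StoryStatus`, `unmet_dod_criteria`, and the 80% and 60% thresholds are hypothetical placeholders for the "X%" values a team would agree on.

```python
from dataclasses import dataclass


@dataclass
class StoryStatus:
    """Hypothetical snapshot of one user story's quality signals."""
    unit_test_coverage: float     # percent, 0-100
    follows_cicd: bool            # built and deployed through the agreed pipeline
    in_sprint_automation: float   # percent of the story's tests automated in sprint
    open_p1_p2_bugs: int          # open Priority 1 and 2 defects
    nfr_met: bool                 # non-functional requirements verified
    user_docs_updated: bool


def unmet_dod_criteria(story, min_coverage, min_automation):
    """Return the names of the DoD criteria the story does not yet meet."""
    checks = [
        (f"unit test coverage of at least {min_coverage}%",
         story.unit_test_coverage >= min_coverage),
        ("follows the defined CI/CD process", story.follows_cicd),
        (f"in-sprint automation of at least {min_automation}%",
         story.in_sprint_automation >= min_automation),
        ("no open Priority 1 or 2 bugs", story.open_p1_p2_bugs == 0),
        ("non-functional requirements met", story.nfr_met),
        ("user documentation updated", story.user_docs_updated),
    ]
    return [name for name, passed in checks if not passed]


# Illustrative thresholds only; each team agrees on its own values.
story = StoryStatus(82.0, True, 55.0, 1, True, False)
gaps = unmet_dod_criteria(story, min_coverage=80.0, min_automation=60.0)
for gap in gaps:
    print("Not done:", gap)
```

A story counts as "done" only when the returned list is empty, which keeps the definition explicit and the same for everyone on the team.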

Keerthi: Why does requirement traceability to code through tools like JIRA, Bitbucket, and Azure DevOps become necessary?

Dhana: A traceability matrix plays an important role in achieving the following:

- Ensuring test coverage of every feature/user story so that the right things get tested

- Enabling easier defect triage, RCA, and impact analysis

- Reducing rework by keeping defects and traceability intact as a knowledge repository
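To make the idea concrete, here is a minimal sketch of a traceability matrix built from exported data. Everything in it is assumed for illustration: the story keys, test case IDs, commit hashes, and the convention that commit messages mention the story key are hypothetical, and no real JIRA, Bitbucket, or Azure DevOps API is called.

```python
import re

# Hypothetical exports from an issue tracker, a test tool, and a code host.
stories = ["SHIP-101", "SHIP-102", "SHIP-103"]
test_cases = [
    {"id": "TC-1", "story": "SHIP-101"},
    {"id": "TC-2", "story": "SHIP-101"},
    {"id": "TC-3", "story": "SHIP-102"},
]
commits = [
    {"sha": "a1b2c3", "message": "SHIP-101 add label printing"},
    {"sha": "d4e5f6", "message": "SHIP-103 fix rate lookup"},
]

# Assumed convention: commit messages contain story keys such as "SHIP-101".
STORY_KEY = re.compile(r"[A-Z]+-\d+")


def build_matrix(stories, test_cases, commits):
    """Link each story to the test cases and commits that reference it."""
    matrix = {key: {"tests": [], "commits": []} for key in stories}
    for case in test_cases:
        if case["story"] in matrix:
            matrix[case["story"]]["tests"].append(case["id"])
    for commit in commits:
        for key in STORY_KEY.findall(commit["message"]):
            if key in matrix:
                matrix[key]["commits"].append(commit["sha"])
    return matrix


matrix = build_matrix(stories, test_cases, commits)

# Stories with no linked tests are coverage gaps worth reviewing.
untested = [key for key, links in matrix.items() if not links["tests"]]
print("Stories without tests:", untested)
```

In this sample data, one story has a commit but no linked test case, which is exactly the kind of gap the matrix is meant to surface during triage and impact analysis.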

Keerthi: What is TDD and what difference does it make?

Dhana: Test-driven development (TDD) is a software development process that relies on the repetition of a very short development cycle: first the developer writes a failing unit test case that defines a desired improvement or new function, then produces the minimum amount of code to pass that test, and finally refactors the new code to acceptable standards.

TDD is important because it helps to ensure that software is developed with quality and reliability in mind. By writing tests before the code, developers are forced to think about the design of their code and how it will be used. This can lead to more modular, reusable, and testable code. Additionally, TDD tests can be used to catch defects early in the development process, which can save a lot of time and money in the long run.
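The cycle described above can be sketched with Python's built-in `unittest` module. The `shipping_fee` function and its pricing rule (a flat fee for the first kilogram plus a per-kilogram charge) are hypothetical and exist only to show the order of work: tests first, then the minimum code to pass them.

```python
import unittest


# Step 1 (red): the tests are written first. Run before shipping_fee exists,
# they do not pass, which confirms that they really exercise new behaviour.
class ShippingFeeTest(unittest.TestCase):
    def test_first_kilogram_is_flat_fee(self):
        self.assertEqual(shipping_fee(1), 5.0)

    def test_each_extra_kilogram_adds_two(self):
        self.assertEqual(shipping_fee(3), 9.0)

    def test_non_positive_weight_is_rejected(self):
        with self.assertRaises(ValueError):
            shipping_fee(0)


# Step 2 (green): the minimum code needed to make the tests pass.
def shipping_fee(weight_kg):
    """Hypothetical rule: 5.0 for the first kg, 2.0 for each additional kg."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    extra_kg = max(0, weight_kg - 1)
    return 5.0 + 2.0 * extra_kg


# Step 3 (refactor): with the tests as a safety net, the code can be
# cleaned up and the suite re-run after every change.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ShippingFeeTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Because the tests exist before the implementation, any later change that breaks the pricing rule is caught the next time the suite runs.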

Keerthi: Most teams have no dedicated Test environment. Most of the testing is done by the developers themselves in their local environment – Why is it a bad practice?

Dhana: The challenges that come with the lack of dedicated test environments are:

- Dev environments often have mocked data or an incomplete code/build, which is not the final build to be tested

- Testing there doesn’t give confidence in test coverage and quality

- Defect reproduction becomes challenging

- It leads to an increase in rework

- Continuous changes to the build during development make testing difficult

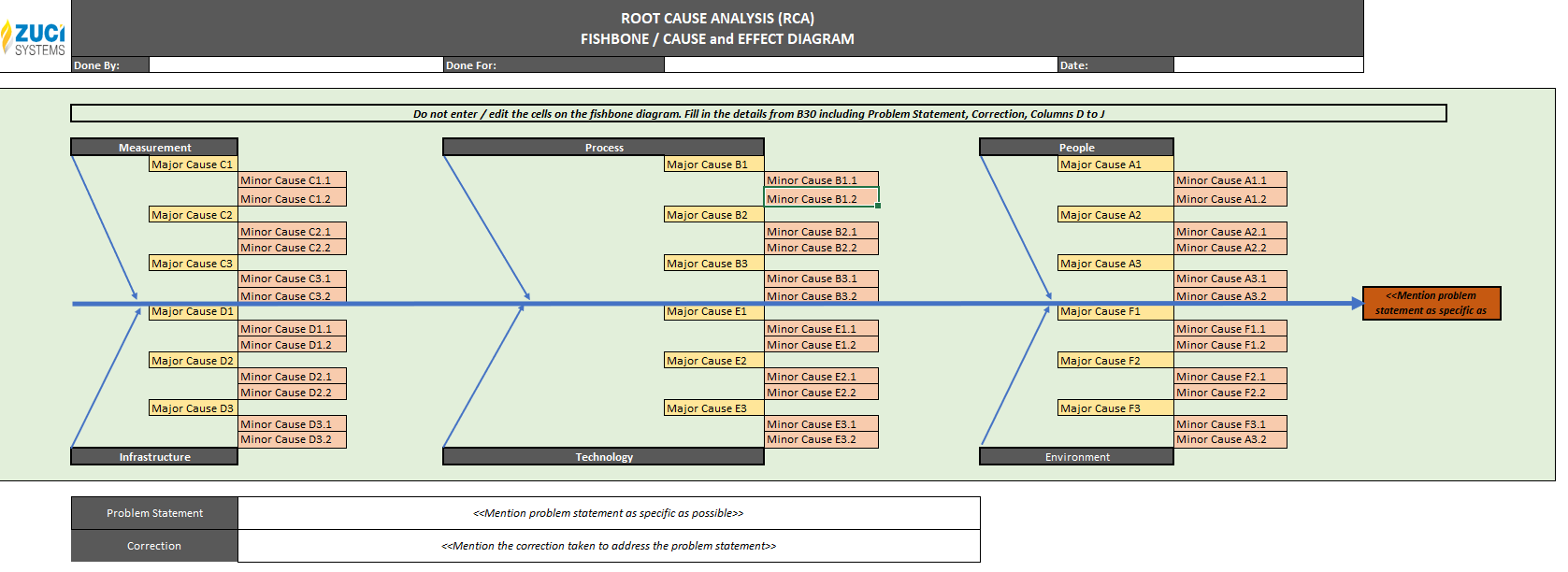

Keerthi: How do you conduct an RCA? Can you share a template?

Dhana:

The RCA is conducted by having all team members participate in a meeting or call to review each defect. Typically, the 5 Whys technique is used to determine the root cause, although there are other methods available.

Currently, RCCA (Root Cause and Corrective Action) is gaining significance. During the same call, we not only identify the root cause but also determine corrective actions. This is crucial to prevent the recurrence of the issue.

RCCA is done in two parts:

1. Why was it injected?

This is to identify the cause of the defect being introduced from the development side.

2. Why was it not detected?

This is to identify why the defect went unnoticed during testing, whether it was in UT or functional testing.
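One way to capture the outcome of such a call is a small structured record per defect. The sketch below is an assumption, not Zuci's template: the `RccaRecord` class, its field names, and the sample defect are all hypothetical. Each "why" chain holds the successive answers from the 5 Whys discussion, with the last answer treated as the root cause.

```python
from dataclasses import dataclass, field


@dataclass
class RccaRecord:
    """Hypothetical record of one defect's RCCA discussion."""
    defect_id: str
    why_injected: list        # chain of "why" answers from the development side
    why_not_detected: list    # chain of "why" answers from the testing side
    corrective_actions: list = field(default_factory=list)

    def root_causes(self):
        """Treat the last answer in each chain as that part's root cause."""
        return {
            "injected": self.why_injected[-1] if self.why_injected else None,
            "not_detected": self.why_not_detected[-1] if self.why_not_detected else None,
        }

    def is_complete(self):
        """Complete only when both parts are answered and actions are agreed."""
        return bool(self.why_injected and self.why_not_detected
                    and self.corrective_actions)


# Sample data invented for illustration.
record = RccaRecord(
    defect_id="BUG-42",
    why_injected=[
        "Rate lookup returned a stale value",
        "Cache was not invalidated after a tariff update",
        "The requirement did not mention tariff updates",
    ],
    why_not_detected=[
        "No test covered a tariff change",
        "The test strategy had no scenario for configuration changes",
    ],
    corrective_actions=[
        "Add tariff updates to the user story acceptance criteria",
        "Add a regression test for rate lookup after a tariff change",
    ],
)
print(record.root_causes())
print("Ready to close:", record.is_complete())
```

Requiring corrective actions before a record counts as complete reflects the point of RCCA: finding the cause is only half of the exercise.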

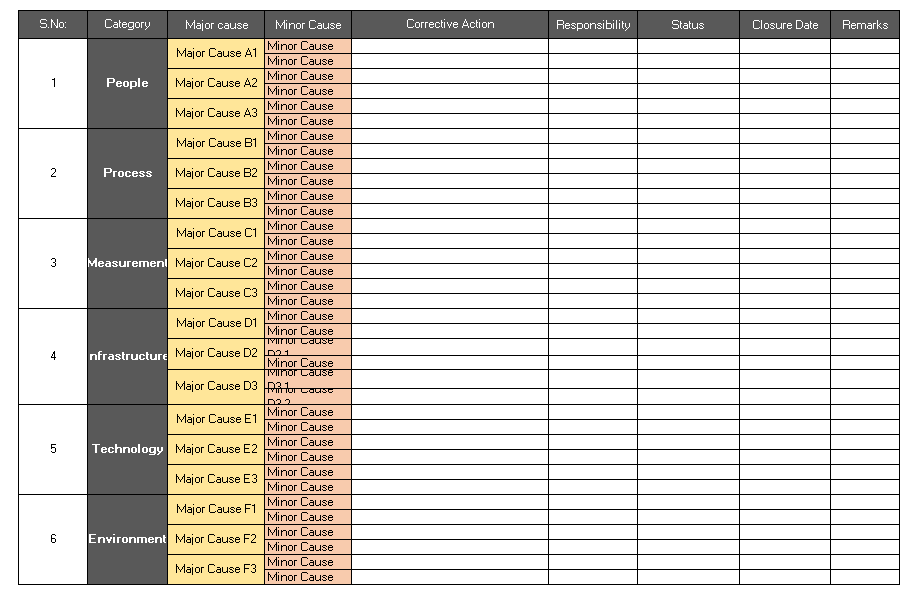

Here’s a sample snapshot of an RCA template.

That’s mostly about the test process assessment. We’ll cover more on the engineering process assessment in the upcoming editions. Stay tuned!

Question For You:

What does quality mean for each team in the SDLC?

Thank you for reading! 😊

Let us know your comments or suggestions below. Please “Subscribe” to receive future editions highlighting some of the most exciting topics about engineering excellence.

If you like our content, show us your support by subscribing to our quarterly newsletter, Sound bytes.

Perfection. Always.