Since its inception in the ’90s, test automation has grown through its teens and into its twenties. From record-and-playback tools to AI- and machine learning-based automation, its evolution has been fascinating, not least because of the constant debate around its effectiveness.

Despite its long history and proven utility, a few questions around test automation still puzzle some of us. For instance:

- Why so few companies succeed at test automation

- Why test automation remains an elusive goal

- Why projects fall behind schedule despite automation

- What role automation will play in future testing

These enigmas come from personal experience, yet they sound familiar and fairly consistent across teams. There are surely more; some have solutions, and some are yet to be solved.

…which brings me to this edition’s topic:

“Test Automation Enigma: Zuci View.”

Hello readers, welcome! This fourth edition of the Z to A Pulse monthly newsletter is brought to you by Keerthi V, Marketing Strategist at Zuci Systems.

I discussed this topic with Sujatha Sugumaran, Head of Quality Engineering at Zuci Systems and a firm advocate of product quality.

Now in Sujatha’s view…

Enigma #1: Why do only a handful of companies succeed at test automation, even though it has been in practice for over two decades?

Get clarity on, and a clear strategy for, what you want to achieve with test automation

Trying to automate tests while the rest of the software development life cycle (SDLC) stays legacy is the fastest recipe for automation disaster. When you set out to automate tests, upgrade the other SDLC phases alongside so that they complement the effort.

For instance, many companies don’t encourage automating unit and integration tests, or don’t build their products with testability in mind. Instead, they try to automate testing only at the UI level; for them, automation means functional or UI tests and nothing else.

These practices tend to follow a predictable path of chaos.

For a while, everything seems to be on track. But when push comes to shove, rework piles up, and eventually the team falls behind schedule.

The usual remedy comes down to one thing: break up the monolithic practice of automating everything at once, and split the work into unit, integration, API, and UI layers.

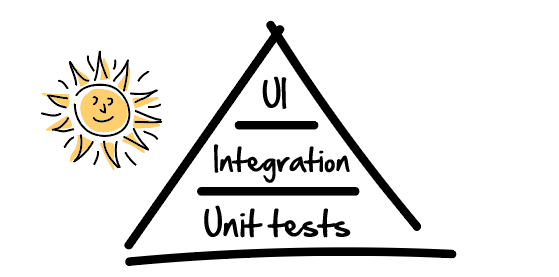

Adopting the test pyramid approach

As Software Testing News put it,

The biggest chunk, 70% of tests written, should be unit tests, which are primarily written by developers to test their own code and catch bugs early, before they reach QA teams. These tests are crucial as they provide a foundation of test coverage that reduces the number of major bugs found later in the test cycle.

Climbing up the pyramid, the next 20% should be allocated to integration tests, which are usually written as systems are integrated with dependencies and third-party systems. Here, mock services and automated virtualized environments can be used to fill in coverage that unit tests may have missed.

As we reach the top of the pyramid, the last 10% should focus primarily on functional UI testing. These tests are brittle, as any UI enhancement can easily break test packs; they should therefore ideally be automated in build pipelines, allowing for maintainability and further exploratory testing as iterative builds progress. Through the test pyramid model, teams can save cost and time in their test cycle by increasing test coverage at the lower end of the pyramid and eliminating any bugs exposed as they move up into the business-centric UI test layers.
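To make the 70/20/10 arithmetic concrete, here is a minimal Python sketch (not part of the quoted article) that splits a planned number of tests across the three layers. The function name `allocate_tests` and the integer-percentage approach are illustrative assumptions; the ratios themselves are a guideline rather than a hard quota.

```python
# Target share of the test suite per layer, in whole percent (the 70/20/10 split quoted above).
PYRAMID_PERCENT = {"unit": 70, "integration": 20, "ui": 10}


def allocate_tests(total: int, percent: dict[str, int] = PYRAMID_PERCENT) -> dict[str, int]:
    """Split `total` planned tests across pyramid layers (hypothetical helper).

    Uses integer arithmetic plus the largest-remainder method, so the
    per-layer counts always add up exactly to `total`.
    """
    if total < 0:
        raise ValueError("total must be non-negative")
    if sum(percent.values()) != 100:
        raise ValueError("percentages must add up to 100")

    counts: dict[str, int] = {}
    remainders: dict[str, int] = {}
    for layer, pct in percent.items():
        counts[layer], remainders[layer] = divmod(total * pct, 100)

    # Give any tests lost to rounding down to the layers with the largest remainders.
    leftover = total - sum(counts.values())
    for layer in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        counts[layer] += 1
    return counts


print(allocate_tests(200))  # {'unit': 140, 'integration': 40, 'ui': 20}
```

Integer percentages and the largest-remainder step avoid floating-point rounding surprises, so an awkward budget such as 15 tests still adds up exactly (11 unit, 3 integration, 1 UI).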

Enigma #2: Why is shifting to automation still not an easy call for most companies?

The State of Test Automation 2022 report finds that 30% of organizations are prioritizing the shift from manual to automated testing in 2022.

This suggests that, for some companies, switching to automated testing is still more aspiration than reality.

Sujatha lists some key factors to consider when shifting to automated testing:

- Support the ecosystem by building a testable product

Make sure the product you build is testable. By testable, I mean having unique, readable UI elements with a unique ID for every component, which removes the need to write XPath scripts. Identifiers become direct, and automating at the UI level stays easy even when the style and design change later.
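The benefit of unique IDs can be illustrated without a browser. The hypothetical sketch below uses Python’s standard `xml.etree.ElementTree` as a stand-in for a real UI automation driver and locates the same button on two made-up versions of a page: once by position, the way a hand-written XPath often does, and once by its unique `id`. The page markup and the `login-submit` ID are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical snapshots of the same sign-in page before and after a redesign.
# The redesign adds a banner and wraps the button in a card, but the developers
# kept the button's unique id unchanged.
PAGE_V1 = """<html><body>
  <div><h1>Sign in</h1></div>
  <div><button id="login-submit">Log in</button></div>
</body></html>"""

PAGE_V2 = """<html><body>
  <div><h1>Sign in</h1></div>
  <div class="banner">New look!</div>
  <div><div class="card"><button id="login-submit">Log in</button></div></div>
</body></html>"""


def find_by_position(page: str):
    """Brittle locator: assumes the button sits in the 2nd <div> directly under <body>."""
    return ET.fromstring(page).find("./body/div[2]/button")


def find_by_id(page: str):
    """Stable locator: relies only on the unique id, wherever the button moves."""
    return ET.fromstring(page).find(".//button[@id='login-submit']")


for name, page in [("v1", PAGE_V1), ("v2", PAGE_V2)]:
    print(name, find_by_position(page) is not None, find_by_id(page) is not None)
# v1 True True
# v2 False True
```

Only the ID-based lookup survives the redesign; positional locators in real UI tests break for the same reason.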

- Understand that functional test automation complements manual regression testing rather than replacing it

With automation, you’re only making regression testing that was previously done by hand more efficient. Do not view automated regression testing in isolation.

- The toolsets and processes you pick must suit your product’s release and testing process

Use Docker containers in place of virtual machines. They cut down on maintenance effort and give you precise control over your environment requirements.

As for process, if your current setup looks something like this: ad-hoc build pass → smoke test → move to production, then integrating automated tests into it will be a difficult and largely meaningless task.

Having a structured process in place, such as a sprint where every activity is predefined, gives testers enough time to set up an automation suite, execute it, analyze test reports, and maintain it.

- Unlearn the habit of executing every test case every time

Your application may be vast, but you need not run a huge automated suite every time. Analyzing reports to identify the impacted areas, and testing only those, is usually enough. It’s fundamental that your test automation framework is designed so that test cases can be split and, at any point, you can run only the ones that are needed.
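A framework that can run only the necessary test cases needs some mapping from tests to the application areas they exercise. The sketch below simply assumes such a mapping exists; the `Case` record, the area names, and `select_impacted` are all hypothetical, and the `layer` field mirrors the unit/integration/API/UI split described earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    name: str
    layer: str               # "unit", "integration", "api" or "ui"
    covers: frozenset[str]   # application areas this test exercises


# Hypothetical inventory. In practice the area mapping might come from test tags,
# coverage data, or a maintained traceability matrix.
SUITE = [
    Case("test_login_valid", "ui", frozenset({"auth"})),
    Case("test_token_refresh", "api", frozenset({"auth", "session"})),
    Case("test_cart_total", "unit", frozenset({"cart"})),
    Case("test_checkout_flow", "ui", frozenset({"cart", "payments"})),
    Case("test_invoice_pdf", "integration", frozenset({"billing"})),
]


def select_impacted(suite, changed_areas, layers=None):
    """Keep only tests that touch a changed area, optionally limited to some layers."""
    changed = set(changed_areas)
    return [
        case for case in suite
        if case.covers & changed and (layers is None or case.layer in layers)
    ]


print([c.name for c in select_impacted(SUITE, {"cart"})])
# ['test_cart_total', 'test_checkout_flow']
print([c.name for c in select_impacted(SUITE, {"cart"}, layers={"unit", "api"})])
# ['test_cart_total']
```

The hard part in a real project is keeping the test-to-area mapping accurate; the selection step itself stays this simple.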

Test automation is deterministic. Known inputs = predictable outputs.

If you’re trying to automate tests that are dynamic, where inputs and outputs keep changing, stop and ask yourself: Is this necessary? Do I really want to add more complexity to the existing application?

Be mindful of the level of complexity you want your test automation approach to have.

Enigma #3: If automated tests are faster, then why do automation projects fall behind schedule?

Automated tests are faster, but you should also acknowledge that automation does not apply to testing new functionality.

New features need to be tested manually first to see whether they fail.

Projects fall behind schedule when unit tests are not automated and testers end up finding 80% of the bugs manually in the QA phase, bugs that should ideally have been caught much earlier, while the component was being developed.

So, when testers’ time goes into finding these bugs, the time needed for API and business-level automation takes a hit, creating a growing backlog for successive sprints.

Makes sense, right?

Sujatha has first-hand experience of hearing customers list pain points such as:

- Massive test case backlogs

- Code freezes that never happen

- Not enough test coverage

- Falling behind the release schedule

Sujatha’s team has been instrumental in setting up quality gates and streamlining many of these customers’ software testing life cycle (STLC) activities, which has helped remediate these quality issues.
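The article doesn’t define a specific quality gate, so the following is only a minimal sketch of the general idea: a check that blocks a build from being promoted unless its test metrics clear agreed thresholds. The metric names, the `evaluate_gate` function, and the threshold values are hypothetical; each team would choose its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildMetrics:
    tests_run: int
    tests_passed: int
    line_coverage: float         # percent, 0-100
    open_critical_defects: int


@dataclass(frozen=True)
class Gate:
    # Hypothetical thresholds; tune them to your own release criteria.
    min_pass_rate: float = 98.0
    min_coverage: float = 80.0
    max_open_critical: int = 0


def evaluate_gate(m: BuildMetrics, gate: Gate = Gate()) -> list[str]:
    """Return the reasons a build fails the gate; an empty list means it may be promoted."""
    failures: list[str] = []
    # A build with no executed tests is treated as a 0% pass rate, not a pass.
    pass_rate = 100.0 * m.tests_passed / m.tests_run if m.tests_run else 0.0
    if pass_rate < gate.min_pass_rate:
        failures.append(f"pass rate {pass_rate:.1f}% < {gate.min_pass_rate}%")
    if m.line_coverage < gate.min_coverage:
        failures.append(f"coverage {m.line_coverage:.1f}% < {gate.min_coverage}%")
    if m.open_critical_defects > gate.max_open_critical:
        failures.append(f"{m.open_critical_defects} open critical defect(s)")
    return failures


print(evaluate_gate(BuildMetrics(500, 495, 84.2, 0)))  # []
print(evaluate_gate(BuildMetrics(200, 180, 72.5, 2)))
# ['pass rate 90.0% < 98.0%', 'coverage 72.5% < 80.0%', '2 open critical defect(s)']
```

Returning the list of violations, rather than a bare pass/fail flag, tells the team exactly why a build was held back.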

Quick tip: Encourage shift-left testing and employ software development engineers in test (SDETs) to cut down on dependencies and enable continuous delivery.

Enigma #4: With the array of test automation tools in the market, what’s the role of automation in future testing?

Automation tools like Cypress, Protractor, and Jasmine are shaping the future by enabling testers and developers to work together. This is a welcome move toward enabling SDETs and blurring the walls between teams.

But to be truly agile, your automation should extend beyond UI test cases to tasks such as automating deployments and database upgrades.

In the future, hyper-automation will require different skills, but there is a crossover in skill sets. In fact, we at Zuci believe that test automation and robotic process automation (RPA) overlap and are not entirely different.

In addition, many AI/ML-based tools with self-healing capabilities, as well as low-code/no-code testing tools, are available today. Being aware of your existing test setup, and of what you want to accomplish with these tools, will go a long way in your automation journey.

To conclude,

We at Zuci believe automation is a great idea. To make it a good investment, apply the 80/20 rule.

Also known as the Pareto principle, the rule holds that roughly 80% of effects come from 20% of causes, and 80% of results come from 20% of the effort.

Find that 80 and 20 for your test automation. 100% automation is a myth; if you chase it, you’ll make little or no progress, and all your effort will turn to dust.
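One possible way to find that 20% is a simple Pareto analysis of where defects cluster. This is a sketch under stated assumptions, not a method from the article: `pareto_focus` takes made-up defect counts per application area and returns the fewest areas that together account for at least 80% of defects, which could be candidates to automate first.

```python
def pareto_focus(defects_by_area: dict[str, int], threshold_pct: int = 80) -> list[str]:
    """Return the fewest areas (most defect-prone first) that together account for
    at least `threshold_pct` percent of all recorded defects."""
    total = sum(defects_by_area.values())
    focus: list[str] = []
    running = 0
    for area, count in sorted(defects_by_area.items(), key=lambda kv: kv[1], reverse=True):
        # Integer comparison avoids floating-point rounding at the threshold.
        if 100 * running >= threshold_pct * total:
            break
        focus.append(area)
        running += count
    return focus


# Hypothetical defect counts per application area (100 defects in total).
DEFECTS = {
    "checkout": 50, "auth": 30, "search": 5, "profile": 4, "settings": 3,
    "help": 2, "about": 2, "footer": 2, "faq": 1, "legal": 1,
}

print(pareto_focus(DEFECTS))  # ['checkout', 'auth']
```

In this made-up data, 2 of 10 areas account for 80 of 100 defects; real projects will rarely split so neatly, which is why the measurement is worth doing.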

Automation does not discover new bugs, and it can’t think like an end user. It merely confirms that whatever worked before still works; in other words, it helps keep regression issues from cropping up.

“Automation does not do what testers used to do unless one ignores most things a tester really does. Automated testing is useful for extending the reach of the tester’s work, not to replace it.” (James Bach)

Testers still need to explore the application manually, check whether it fails, and find out why, so that the issue can be fixed.

As James Bach put it,

If testing is a means to the end of understanding the quality of the software, automation is just a means to a means.

Question for you:

What are your thoughts on the subjects raised in this edition of Z to A Pulse?

Let us know your comments or suggestions below. Please “Subscribe” to receive future editions highlighting some of the most exciting topics across engineering excellence.

Thank you for reading!

Read more if interested: