Hello readers, welcome! This article is part of the Z to A Pulse: Report spotlight, where we publish our thoughts on findings from reputed sources such as the World Quality Report, Gartner, and Forrester. We analyse their findings and trends with the help of our engineering leaders, then share the insights to help you achieve engineering excellence. Subscribe by clicking on the button above to read upcoming editions.

Into the topic…

As a product quality owner, you did your homework and got a budget allotted for test automation.

…Researched new plug and play tools in the market

…Built the “perfect” automation framework

…But still no actual benefits…

Sounds familiar?

You’re not alone!

The latest (2022-23) World Quality Report says:

We have been researching the test automation topic for many years, and it is disappointing that organizations still struggle to make test automation work. The survey results indicate that teams with a mature Agile process typically get more benefits from test automation activities.

The report further expands and defines the two very common challenges that companies face with test automation as the following:

Test automation is not always naturally integrated into the development process but organized as a separate activity, independent from development and testing itself.

Teams prioritize selecting the test automation tools but forget to define a proper test automation plan and strategy.

Hi! I’m Keerthi V, Marketing strategist at Zuci Systems. To gain a better rationale and understanding of the WQR findings, I picked our subject matter experts’ brains.

Meet our sources:

Sujatha Sugumaran, Director, Quality Engineering at Zuci Systems

Saifudeen Khan, Director, Digital Engineering at Zuci Systems

Saif and Sujatha have spent more than 50,000 hours helping engineering teams deliver flawless products and seamless user experiences.

Here’s what they have to share about the topic.

Test automation is not always naturally integrated into the development process but organized as a separate activity, independent from development and testing itself.

Keerthi: Sujatha, this makes me wonder whether engineering teams have yet to realise an Agile-driven SDLC?

Sujatha: There are many different interpretations of Agile today. For some teams, Agile = getting things done quickly. This can lead to a situation where the testing effort is minimized, and the team ends up with a test automation backlog.

Typically, test automation gets introduced into an Agile team by creating a separate team that is responsible for developing and maintaining the automation scripts. This team works in parallel with the development team, which is responsible for creating new features.

This approach is not inherently wrong. At some point, test automation is necessary, and this is a good way to start. However, the two teams eventually need to merge, and this is where problems often arise.

When the two teams are separate, they often work in silos and do not collaborate effectively. This can lead to disjointed efforts and a lack of communication. The result is a huge test automation backlog, and the team may not be able to keep up with the changes in the product.

To avoid these problems, both the development and testing teams should work together to identify the right tests to automate, and they should use a common automation framework. This will help to ensure that their test automation is effective and that the team can keep up with the changes in the product.

You might be interested in:

On failing Agile transformations…

Saif: To avoid agile transformation failures, keeping these things in mind should be helpful –

- Awareness of Agile is the bare minimum requirement in the organization. Once this is in place, it’s easier to build momentum.

- I always start with a matrix in my mind: who is responsible, who is accountable, who is consulted, and who needs to be kept informed (a RACI matrix). This can then be published to the stakeholders, which is a good practice to have.

- Next come the estimation techniques. Agile gives you different methods, such as planning poker and intuition, to estimate the effort that lies ahead of you. It also gives you the flexibility to go back to the person working on a particular sprint to get the effort estimate, and it's important to validate their competence. At this point, one can suggest, for example, going with planning poker. The estimate shown by the majority of the cards across the table is adopted, and the team must work within that estimate.

- Lastly, for every sprint, ensure that the story points, the velocity of the team, and priority tasks, are published to the stakeholders and others involved. This is so that everyone available in the meeting can discuss any deviation or ad hoc priority, and some capacity can be reserved for such tasks before you move on.

People should start accepting that Agile is not a one-stop solution for every problem. It helps you identify problems at an early stage so that you have time to correct them. It is then up to the stakeholders to take the necessary steps.

If you underestimate these problems and let them persist without solving them permanently, you are going to fail.

Keerthi: How do we know whether a company's Agile practices are mature enough?

Sujatha: A lot of teams try to move fast, but sometimes they end up going in the wrong direction. This can happen when teams focus on speed at the expense of quality.

It is a red flag if you're focused on building new features and not giving enough time to testing. This can lead to quality gaps that will eventually slow you down.

It's important to set metrics for each process and track them over time. This will help you discover your leading and lagging indicators. If everything looks good, but you're still seeing a lot of P0 and P1 defects, then something is wrong.
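As a rough illustration of tracking one such metric over time, the sketch below computes the share of P0/P1 defects per release and flags the releases where that share is high. The record layout, release names, and the 20% threshold are assumptions made for this example; real data would come from your defect tracker.

```python
from collections import Counter

# Hypothetical defect records: (release, priority).
DEFECTS = [
    ("R1", "P0"), ("R1", "P2"), ("R1", "P3"), ("R1", "P3"),
    ("R2", "P1"), ("R2", "P0"), ("R2", "P2"),
    ("R3", "P3"), ("R3", "P2"),
]

CRITICAL = {"P0", "P1"}


def critical_share_by_release(defects):
    """Return {release: fraction of its defects that are P0 or P1}."""
    total = Counter()
    critical = Counter()
    for release, priority in defects:
        total[release] += 1
        if priority in CRITICAL:
            critical[release] += 1
    return {release: critical[release] / total[release] for release in total}


def flag_releases(shares, threshold=0.2):
    """List releases whose critical-defect share exceeds the assumed threshold."""
    return sorted(r for r, share in shares.items() if share > threshold)


if __name__ == "__main__":
    shares = critical_share_by_release(DEFECTS)
    for release in sorted(shares):
        print(f"{release}: {shares[release]:.0%} critical")
    print("Needs attention:", flag_releases(shares))
```

Watching this share release after release, rather than at a single point in time, is what turns it into a trend you can act on.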

And this is where teams would want to bring in a third-party perspective. An external QA consultant could come in and look at your overall engineering and release processes and give you some recommendations. They can help you identify areas where you can improve your quality and speed.

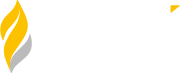

The following areas can be analysed:

Here’s a sample questionnaire to assess your team’s QA maturity.

Phase: Requirement

Assessment questionnaire:

- Are the requirements given to testers? How & when?

- Are the requirements articulated with enough detail in FRD?

- Are requirements documented with any assumptions?

- Does the QA focal point participate in requirement discussions/clarifications with the Business Analyst/Customer/Developer?

- Are the requirements analysed and understood from both system and business perspectives by developers and QA?

- Are the changes in requirements communicated to the testers? How & when?

- Requirement traceability – Is Requirement to code traceable? How?

- How are requirement and scope changes managed? Repository/Tools?

- Who will control (approve/reject) changes in requirements? (A kind of Change Control Board)

- Is the change in requirement captured in detail?

- How frequently are requirement changes accepted without compromising the milestone?

- Is a Design of Experiments available to find cause-effect relationships?

- Is detailed documentation available for flag-based features?

- Is documentation available for test data preparation?

To view the complete assessment questionnaire on Test planning, Testing, General Analysis on Test Metrics and Automation, hit a “hi” at sales@zucisystems.com or comment below in this article.

Read more: Sujatha briefs – 4 most crucial software test metrics from her list.

Keerthi: I think not many companies are realising the benefits of test automation because they are not mature enough in their test practices and have disjointed efforts. How do you know when your org is ready for test automation?

Sujatha: Your regression backlog should not be too big or stretch into a long roadmap. Try not to cover everything with automation; instead, cover what is critical for the business. Build a regression automation backlog that can be achieved in a short span of time and start consuming the scripts from week 1.

Then you should slowly bring automation into the sprint team so that they can work on current user stories and the regression master suite and achieve in-sprint automation.

Whenever a change request comes in, check for relevant test scripts that need to be modified. Find the business-critical test cases and update them in the master suite.
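One way to make that check repeatable is a simple traceability map from requirements to test scripts. The sketch below is only illustrative: the requirement IDs, script names, and the contents of the master suite are all hypothetical.

```python
# Hypothetical traceability map: requirement ID -> test scripts that cover it.
TRACEABILITY = {
    "REQ-101": ["test_checkout.py", "test_cart_totals.py"],
    "REQ-102": ["test_login.py"],
    "REQ-103": ["test_checkout.py", "test_invoice_email.py"],
}

# Hypothetical set of scripts in the business-critical master suite.
MASTER_SUITE = {"test_checkout.py", "test_login.py"}


def scripts_to_review(changed_requirements):
    """Return (all affected scripts, affected master-suite scripts), both sorted."""
    affected = set()
    for req_id in changed_requirements:
        # Unknown requirement IDs simply contribute no scripts.
        affected.update(TRACEABILITY.get(req_id, []))
    return sorted(affected), sorted(affected & MASTER_SUITE)


if __name__ == "__main__":
    affected, critical = scripts_to_review(["REQ-101", "REQ-103"])
    print("Review:", affected)
    print("Update in master suite first:", critical)
```

In practice this mapping would more likely live in a test management tool than in code, but the lookup is the same idea.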

Merge the test automation team and the sprint team so that the regression team has continuous feedback in terms of test coverage, etc. This will help to ensure that the regression suite is kept up-to-date and that the team is able to quickly identify and fix any regressions.

For one of our clients, achieving in-sprint automation was one of the goals, and we helped draw up a roadmap that let them reach it gradually:

- Client’s sprint team consisted of Development + Manual testing team

- Zuci’s test automation (TA) engineers got integrated into the team

- TA engineers worked on the UAT automation suite

- Our team spoke to the PO and understood the business-critical test cases essential for production sign-off

- Our team picked up from there and started working on the test cases backlog for automation

- UAT & Regression automation exercise spanned over a 6-month period

- Subsequently, we got integrated into N-1 sprint teams

- Finally achieved N/In-sprint automation

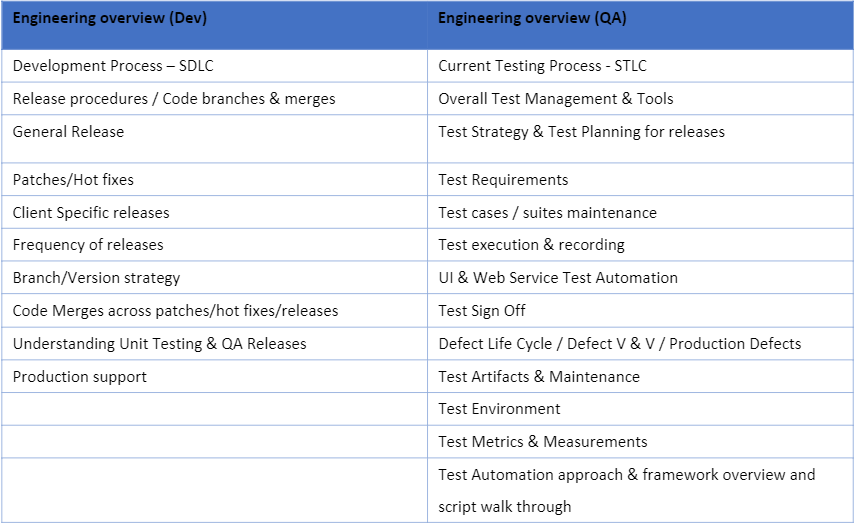

Keerthi: Surprisingly, the report tells us that “Maintainability”, not ROI, is the most important factor in determining a company’s approach to test automation.

Sujatha: That’s true. We are seeing conversations around test automation shift towards test maintenance and test tools. There are a few reasons for it. First, any software is constantly changing, so it becomes important that test scripts can be easily updated to reflect those changes.

Second, test scripts can become complex over time, so it’s important that they be easy to understand and maintain. Third, test automation can be expensive, so it’s important to make sure that the investment is worthwhile.

Here are a few things that organizations can do to improve the maintainability of their test automation scripts:

- Using a modular approach to test automation

- Using well-defined naming conventions

- Using comments to explain the purpose of each test script

- Using a version control system to track changes to test scripts

- Creating test automation documentation
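To make the first three points concrete, here is a minimal sketch of a modular test built around a page object. It is an illustration under stated assumptions, not a prescribed framework: `FakeDriver`, `LoginPage`, and the element IDs are hypothetical, and the fake driver stands in for a real browser driver so the example runs on its own.

```python
class FakeDriver:
    """Stand-in for a browser driver so the sketch runs without a real browser."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def type_into(self, element_id, text):
        self.fields[element_id] = text

    def click(self, element_id):
        self.clicked.append(element_id)


class LoginPage:
    """Page object: the only place that knows the login screen's element IDs."""

    USERNAME_FIELD = "login-username"
    PASSWORD_FIELD = "login-password"
    SUBMIT_BUTTON = "login-submit"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type_into(self.USERNAME_FIELD, username)
        self.driver.type_into(self.PASSWORD_FIELD, password)
        self.driver.click(self.SUBMIT_BUTTON)


def test_login_submits_credentials():
    """Purpose: the login form is filled in and submitted with the given user."""
    driver = FakeDriver()
    LoginPage(driver).log_in("demo-user", "demo-pass")
    assert driver.fields[LoginPage.USERNAME_FIELD] == "demo-user"
    assert driver.fields[LoginPage.PASSWORD_FIELD] == "demo-pass"
    assert driver.clicked == [LoginPage.SUBMIT_BUTTON]


if __name__ == "__main__":
    test_login_submits_credentials()
    print("login test passed")
```

If the login screen changes, only `LoginPage` needs updating; the tests that use it stay as they are, which is where most of the maintenance saving comes from.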

Keerthi: What are some ways you think we could encourage testers and developers to work together?

Sujatha: It should start right from the requirements phase. For example, at that stage developers usually only care about tweaking the modules, code, or tech stack that fall within their scope.

What can be done instead is to include the test team along with the developers to analyse and foresee the impact of requirement changes on testing, take testability into consideration while designing, and so on.

Testability – Developers can write code with testability in mind by using techniques such as mock objects and unique identifiers for UI/visual components. This makes automation easier for testers and helps ensure that the software is more easily testable.
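As a small sketch of the mock-object technique, the example below uses Python's standard `unittest.mock`. The `OrderService` class and its payment gateway are hypothetical; the point is that the dependency is passed in, so a test can replace it with a mock.

```python
from unittest.mock import Mock


class OrderService:
    """Receives its payment gateway as an argument so tests can swap it out."""

    def __init__(self, payment_gateway):
        self.payment_gateway = payment_gateway

    def place_order(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        approved = self.payment_gateway.charge(amount)
        return "confirmed" if approved else "declined"


def test_order_confirmed_when_charge_succeeds():
    gateway = Mock()
    gateway.charge.return_value = True
    assert OrderService(gateway).place_order(50) == "confirmed"
    # The mock also records how it was called.
    gateway.charge.assert_called_once_with(50)


def test_order_declined_when_charge_fails():
    gateway = Mock()
    gateway.charge.return_value = False
    assert OrderService(gateway).place_order(50) == "declined"


if __name__ == "__main__":
    test_order_confirmed_when_charge_succeeds()
    test_order_declined_when_charge_fails()
    print("order tests passed")
```

Had `OrderService` created its gateway internally, the same tests would need a real payment system, which is exactly the kind of friction testable design avoids.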

Developers can break down complex tasks into smaller chunks that are easier to test. For example, the architecture of an application can be designed so that testing is easy and does not involve multiple steps.

Saif:

I believe that quality is a shared responsibility, not just the tester’s.

In a lot of teams, developers just push out garbage to the testing team and don’t take responsibility for their work. This totally goes against the spirit of Agile, because in Agile, if one part of the team fails, the whole team fails.

Developers should validate their code as they’re writing it. When defining user stories, the whole team including developers, manual testers, and automation testers must be involved. This ensures that everyone has a say in the definition of the story and that the criteria for “done” are clear. If test automation is not included in the “done” criteria, then the regression backlog suite will pile up and come back to haunt the team a few years down the line.

We should encourage and reward teams where devs and testers work together. This will help teams see quality as a shared responsibility. In my experience, this works wonders: it helps ensure that the software is released with fewer bugs and that the team can deliver value to the customer faster.

Teams prioritize selecting the test automation tools but forget to define a proper test automation plan and strategy.

Keerthi: Sujatha, does the above mean that for a lot of teams, a plan is just another formality?! How do we review a “test plan” today and give the go-ahead?

Sujatha: Traditionally, test plans are long, wordy documents. However, with the advent of test management tools, test plans can now be concise and lightweight. In my view, a test plan can simply cover what we are going to test for this release, based on the user stories and their impact.

Details such as the types of testing planned, the test cases, when they are to be executed, and who they are assigned to can be stored in a test management tool like TestRail, Xray, or Zephyr. These tools integrate with Jira, allowing teams to keep track of things and adapt to any changes that may arise. Such integrations also give analytical insight into past releases so that the team can learn more about defect trends and plan accordingly.

Keerthi: Any tips on picking test automation tools? I also think test automation’s success will mostly depend on people’s approach towards it and not toolsets. What are your thoughts?

Sujatha: That’s right. Test automation tools that sync with the development environment are very helpful. For example, if the web app is developed in C#, writing test automation code in C# is beneficial because the logic is easy to understand, and when we are stuck, developers are ready to help.

You’ll also have the support of the tool ecosystem because we are not bringing in anything alien. Again, it’s not a must-have but a nice-to-have aspect of picking automation tools. Sometimes, switching to a different tech stack is necessary because the current one lacks the maturity or the available skillset to achieve certain things.

But again, success of test automation does not depend on toolsets alone. You have to be clear about your application and strategize:

- What are we automating?

- How are we automating?

- What to automate at what level (UI, API, Services)?

- Where did it fail, and why?

- Which toolsets and people are right for the job?

Successful test automation journeys are not about tools and processes, but it is about people and their approach to solving problems. It paves way for everything else – Vasudevan Swaminathan, Founder & CEO, Zuci Systems

Commercial tools can promise a lot of benefits, but if you don’t know the “Why” behind what you are doing, then you are bound to fail sooner or later.

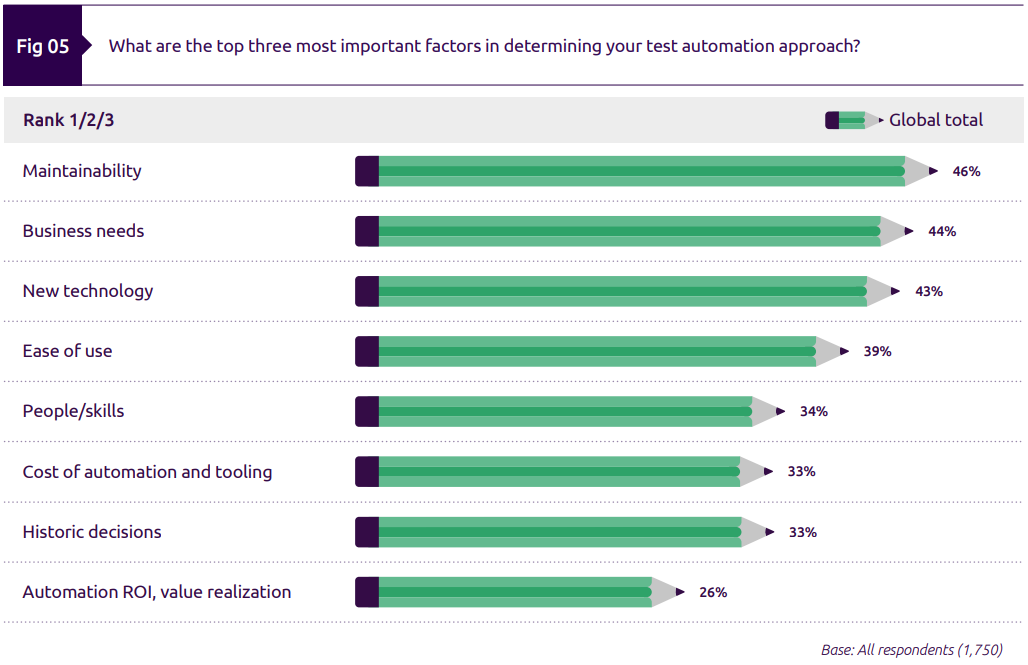

Here are some final thoughts from the report on: What should organizations focus on?

- Work earlier with quality automation experts.

- Automation starts as the requirements are being created; build an automation-first approach into the requirements and stories.

- Get automation requirements agreed on before you start to automate.

- Focus on what delivers the best benefits to customers and the business rather than justifying ROI. Review your tooling and frameworks on a regular basis.

- Plan a roadmap for at least the next three years.

- One tool doesn’t do everything. Pick the best tools for the job. Don’t try and make one tool do everything.

- Invest in people.

- Stop chasing after unicorns and work with the people you have – they know your business

Question for you:

How mature are your test automation practices?

- 30%

- 30-60%

- 60-100%

Thanks for reading! We’re excited about delving deeper into the insights from the World Quality Report in our future editions.

If you like our content, show us your support by subscribing to our quarterly newsletter, Sound bytes, and by sharing this article with your team. Your support means the world to us!